
Introduction

Amazon Simple Storage Service (S3) is one of the most widely used object storage services, thanks to its scalability, security, performance, and data availability. Customers of any size and industry can use it to store any volume of data for use cases such as websites, mobile apps, enterprise applications, and IoT devices.

Amazon S3 provides easy-to-use management features so you can appropriately organize your data to fulfill your business requirements.

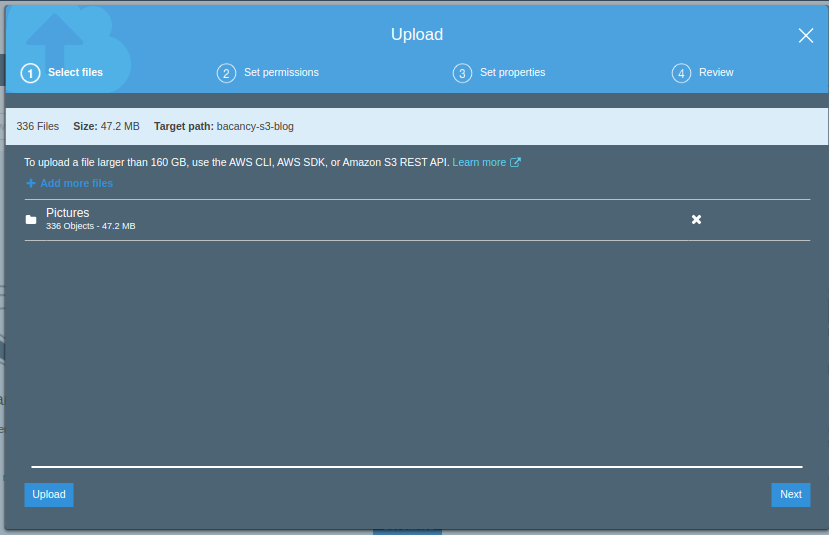

Many of us use AWS S3 buckets on a daily basis. One of the most common challenges when working with cloud storage is syncing or uploading multiple objects at once. Yes, we can drag and drop files or upload them directly on the bucket page, as in the image below.

But the problem with this approach is that if you’re uploading large objects over an unstable network and a network error occurs, you have to restart the upload from the beginning.

Suppose you are uploading 2000+ files and, after an hour of uploading, you find out that the upload has failed. Re-uploading everything becomes a time-consuming process. To overcome this problem, we have two solutions that we will discuss in the next sections.

Prerequisites

- AWS Account

- Installed AWS CLI

Upload Objects Using Multipart Upload API

Multipart upload lets you upload a single object as a set of parts. Each part can be uploaded independently and in any order.

If the transmission of any part fails, you can retransmit that part without affecting the others. So, it’s good practice to use multipart upload for large objects instead of uploading them in a single operation.

Advantages of using multipart upload:

- Improved throughput: parts can be uploaded in parallel to improve upload speed

- Fast recovery from network issues: only the failed part is retried, so there is no need to re-upload from the beginning

- Pause and resume object uploads

- Upload an object while you are still creating it, before its final size is known
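As one concrete illustration, the high-level “aws s3” commands of the AWS CLI already switch to multipart upload automatically once a file exceeds a size threshold. The sketch below adjusts that threshold and the part size with “aws configure set”. The 64MB and 16MB values are illustrative assumptions, not recommendations, and the commands write to your default CLI profile. The arithmetic at the end only estimates how many parts a 1 GiB file would be split into.

```shell
# Illustrative values (assumptions): tune them for your own network.
THRESHOLD="64MB"   # files larger than this are sent as multipart uploads
CHUNK_SIZE="16MB"  # size of each uploaded part

# Persist the settings in the default profile of the AWS CLI config file.
aws configure set default.s3.multipart_threshold "$THRESHOLD"
aws configure set default.s3.multipart_chunksize "$CHUNK_SIZE"

# Estimate the part count for a 1 GiB file at 16 MiB per part (ceiling division).
FILE_BYTES=$((1024 * 1024 * 1024))
PART_BYTES=$((16 * 1024 * 1024))
PART_COUNT=$(( (FILE_BYTES + PART_BYTES - 1) / PART_BYTES ))
echo "A 1 GiB file would be uploaded in ${PART_COUNT} parts"
```

Smaller parts mean less data to resend after a failure, while larger parts mean fewer requests. The right balance depends on how unstable the connection is.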

The multipart upload API is available through the AWS SDKs for different technologies or through the REST API; for more details, see the Amazon S3 documentation. You can also access your online storage like a disk and manage your data easily and conveniently with CloudMounter.


Copy Files to AWS S3 Bucket using AWS S3 CLI

Install AWS CLI

First, we need to install the AWS CLI, which lets us perform S3 copy operations. If you don’t know how to install it, follow this guide: Install AWS CLI.
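Once the installation finishes, a quick check like the following can confirm that the “aws” executable is on your PATH. This is a minimal sketch; the CLI_STATUS variable is only a hypothetical name used for illustration.

```shell
# Check whether the AWS CLI is available before running any S3 commands.
if command -v aws >/dev/null 2>&1; then
  aws --version              # prints the installed CLI version
  CLI_STATUS="installed"
else
  echo "AWS CLI not found on PATH; install it before continuing."
  CLI_STATUS="missing"
fi
```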

Configure AWS Profile

Now, it’s time to configure the AWS profile. For that, use the “aws configure” command. It prompts for your AWS credentials, which you can find in the AWS console under IAM -> Users -> Security credentials tab.
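The interactive session looks roughly like the transcript in the comments below. The region and output format shown are assumptions; substitute your own values and never paste real keys into a shared script. The last command is a non-interactive way to confirm what the CLI picked up.

```shell
# Interactive setup: run "aws configure" once in a terminal and answer the prompts.
# The values below are placeholders, not real credentials.
#
#   $ aws configure
#   AWS Access Key ID [None]: <your-access-key-id>
#   AWS Secret Access Key [None]: <your-secret-access-key>
#   Default region name [None]: us-east-1
#   Default output format [None]: json

PROFILE="default"   # plain "aws configure" writes to the default profile

# Show which credentials (masked), region, and config sources are in effect.
aws configure list --profile "$PROFILE"
```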

We are done configuring the AWS profile. Now, you can access your S3 bucket named “bacancy-s3-blog” using the “aws s3 ls” list command.

List All the Existing Buckets in S3

Use the “aws s3 ls” command to list all the existing buckets.
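A minimal sketch, assuming the configured credentials are allowed to list buckets and to read the bucket named in this article:

```shell
BUCKET="bacancy-s3-blog"   # bucket name used in this article

# List every bucket owned by the configured account (creation date and name).
aws s3 ls

# List the objects and prefixes at the top level of one bucket.
aws s3 ls "s3://${BUCKET}"
```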

Copy Single File to AWS S3 Bucket

Use the “aws s3 cp” command to copy a single file to the S3 bucket.
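For example, with a hypothetical local file named sample.txt, the copy could look like this:

```shell
BUCKET="bacancy-s3-blog"     # bucket name used in this article
LOCAL_FILE="./sample.txt"    # hypothetical local file

# Upload the file; with a trailing slash on the destination,
# the object key defaults to the file name (sample.txt).
aws s3 cp "$LOCAL_FILE" "s3://${BUCKET}/"
```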

AWS S3 Copy Multiple Files

Use the “aws s3 cp” command with the “--recursive” flag to copy multiple files from one directory to another using AWS S3.

Note: The “--recursive” flag tells aws s3 cp to copy all files in the directory, including those in subdirectories.
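A sketch of the recursive copy, where the local directory and the destination prefix are hypothetical names chosen for illustration. The “--dryrun” flag previews the operations first, and the last command shows one way to restrict the copy to a file pattern.

```shell
BUCKET="bacancy-s3-blog"          # bucket name used in this article
LOCAL_DIR="./my-files"            # hypothetical local directory
DEST="s3://${BUCKET}/my-files/"   # hypothetical destination prefix

# Preview what would be copied without transferring anything.
aws s3 cp "$LOCAL_DIR" "$DEST" --recursive --dryrun

# Copy every file under LOCAL_DIR, keeping the directory layout.
aws s3 cp "$LOCAL_DIR" "$DEST" --recursive

# Optional: copy only .jpg files by excluding everything, then re-including a pattern.
aws s3 cp "$LOCAL_DIR" "$DEST" --recursive --exclude "*" --include "*.jpg"
```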

As you can see in the above video, even if the network connection is lost and later restored, the process continues after reconnecting without losing any files.
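If a copy does stop partway through, one option that this walkthrough does not otherwise cover is “aws s3 sync”, which uploads only files that are new or changed compared with the destination. Running it again after an interruption therefore picks up the remaining files instead of starting over. The directory and prefix names below are the same hypothetical ones as before.

```shell
BUCKET="bacancy-s3-blog"          # bucket name used in this article
LOCAL_DIR="./my-files"            # hypothetical local directory
DEST="s3://${BUCKET}/my-files/"   # hypothetical destination prefix

# Upload only files that are missing from, or differ from, the destination.
aws s3 sync "$LOCAL_DIR" "$DEST"
```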

Upload Files to S3 via GUI Desktop App – Commander One

Commander One is a versatile Amazon S3 client that allows users to access and interact with their S3 storage. You can mount S3 as a network drive and easily browse, upload, download, and manage files and folders stored on it right from Commander One.

The app also handles files in S3-compatible storage solutions, such as Wasabi, MinIO, Ceph, DreamObjects, and Google Cloud Storage. Moreover, it supports AWS IAM (Identity and Access Management), enabling users to set up precise access controls across all client services.

In addition to Amazon S3, Commander One integrates with other popular cloud storage services (Google Drive, OneDrive, Dropbox, etc.) and lets you connect to remote servers via the FTP, FTPS, and SFTP protocols. This provides easy access to all your clouds and remote servers within one application.

But Commander One isn’t just an Amazon S3 client for Mac; it’s also a powerful dual-pane file manager equipped with an array of features for various tasks. Robust search capabilities, unlimited tabs, a built-in file viewer: it includes everything you need for swift and convenient management of both local and online files. You can also reveal hidden files, use the built-in Terminal, and work with archives. For added security, the app can encrypt files to protect your data.

Conclusion

So, this was about how to copy multiple files from a local machine to an AWS S3 bucket using the AWS CLI. If you’re looking to enhance your AWS capabilities further, don’t hesitate to contact us. We offer AWS Consulting Services, AWS Architects, and the opportunity to hire AWS developers who can assist you in achieving your cloud computing goals.
